Fine-tuning with the kluster.ai platform

The kluster.ai platform provides a visual, no-code approach to fine-tuning AI models. With an intuitive interface and real-time feedback, you can train customized models without writing a single line of code.

This guide walks you through the platform's fine-tuning workflow, from uploading your training data to deploying your specialized model.

Prerequisites

Before getting started with fine-tuning, ensure you have the following:

- A kluster.ai account: Sign up on the kluster.ai platform if you don't have one.

- Prepared dataset: You need data formatted according to kluster.ai's requirements for fine-tuning (detailed below).

Supported models

kluster.ai currently supports fine-tuning for the following models:

Data preparation

High-quality, well-formatted data is crucial for successful fine-tuning:

- Format: Data must be in JSONL format, where each line is a valid JSON object representing a training example.

- Structure: Each JSON object should contain a `messages` array with system, user, and assistant messages.

- Example format:

  ```json
  { "messages": [ { "role": "system", "content": "You are a JSON Generation Specialist. Convert user requests into properly formatted JSON." }, { "role": "user", "content": "Create a configuration for a web application with name 'TaskMaster', version 1.2.0, and environment set to development." }, { "role": "assistant", "content": "{\n \"application\": {\n \"name\": \"TaskMaster\",\n \"version\": \"1.2.0\",\n \"environment\": \"development\"\n }\n}" } ] }
  ```

- Quantity: The minimum requirement is 10 examples, but more diverse and high-quality examples yield better results.

- Quality: Ensure your data accurately represents the task you want the model to perform.
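Before uploading, it can help to check a file locally against the requirements above. The following Python sketch is not a kluster.ai tool: it only checks the rules listed in this guide (valid JSON on every line, a non-empty `messages` array whose entries have string content and together cover the system, user, and assistant roles, and at least 10 examples). The platform may apply additional checks, and the function name `validate_jsonl` is hypothetical.

```python
import json

# Roles that the data preparation requirements above say each example contains.
REQUIRED_ROLES = {"system", "user", "assistant"}
# Minimum number of training examples stated in this guide.
MIN_EXAMPLES = 10


def validate_jsonl(path):
    """Return a list of problems found in a JSONL fine-tuning file (empty if none)."""
    problems = []
    count = 0
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # Ignore blank lines instead of counting them as examples.
            count += 1
            try:
                record = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {lineno}: invalid JSON ({exc.msg})")
                continue
            messages = record.get("messages") if isinstance(record, dict) else None
            if not isinstance(messages, list) or not messages:
                problems.append(f"line {lineno}: missing or empty 'messages' array")
                continue
            # Every message must be an object with string content.
            if not all(isinstance(m, dict) and isinstance(m.get("content"), str) for m in messages):
                problems.append(f"line {lineno}: every message needs string 'content'")
                continue
            roles = {m.get("role") for m in messages}
            missing = REQUIRED_ROLES - roles
            if missing:
                problems.append(f"line {lineno}: missing roles {sorted(missing)}")
    if count < MIN_EXAMPLES:
        problems.append(f"only {count} examples found; at least {MIN_EXAMPLES} are required")
    return problems
```

For example, `validate_jsonl("train.jsonl")` returns an empty list when the file passes these checks; the filename is a placeholder.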

Data preparation

For a detailed walkthrough of data preparation, see the Fine-tuning Sentiment Analysis Tutorial.

Find Llama datasets on Hugging Face

Hugging Face Datasets hosts a wide range of datasets suitable for fine-tuning Llama models. Browse trending and community-curated datasets to accelerate your data preparation.
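Community datasets rarely use the `messages` structure directly, so they usually need converting. The sketch below assumes flat records with a prompt field and a response field (the default key names `prompt` and `response` are hypothetical; adjust them to the dataset's actual columns) and wraps each record with a fixed system prompt. Rows can come from any iterable of dictionaries, such as a dataset loaded with the Hugging Face `datasets` library. The helper names are illustrative.

```python
import json


def to_messages_jsonl(rows, system_prompt, prompt_key="prompt", response_key="response"):
    """Convert flat prompt/response records into message-style training examples.

    Returns one JSON string per example, ready to be written as a JSONL line.
    """
    lines = []
    for row in rows:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": row[prompt_key]},
                {"role": "assistant", "content": row[response_key]},
            ]
        }
        # json.dumps escapes embedded newlines, so each example stays on one line.
        lines.append(json.dumps(example, ensure_ascii=False))
    return lines


def write_jsonl(lines, path):
    """Write one JSON string per line to a UTF-8 file."""
    with open(path, "w", encoding="utf-8") as f:
        for line in lines:
            f.write(line + "\n")
```

Because `json.dumps` escapes newlines inside strings, multi-line prompts and responses still occupy a single line in the output file.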

Fine-tuning workflow

Fine-tuning a model in the kluster.ai platform interface involves several key steps, each described in the following sections.

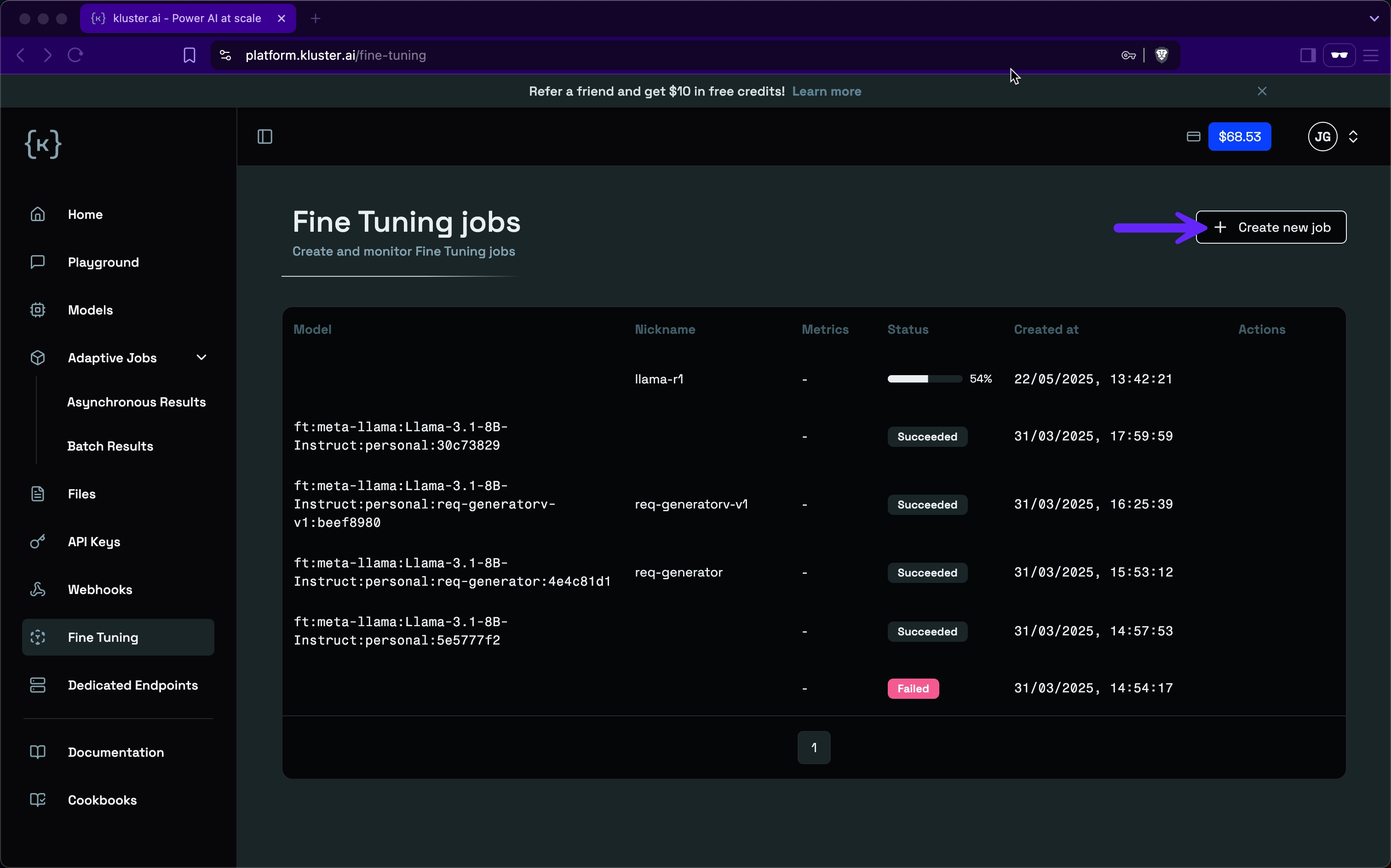

Navigate to the fine-tuning page

To begin, visit the kluster.ai fine-tuning page and click the Create new job button.

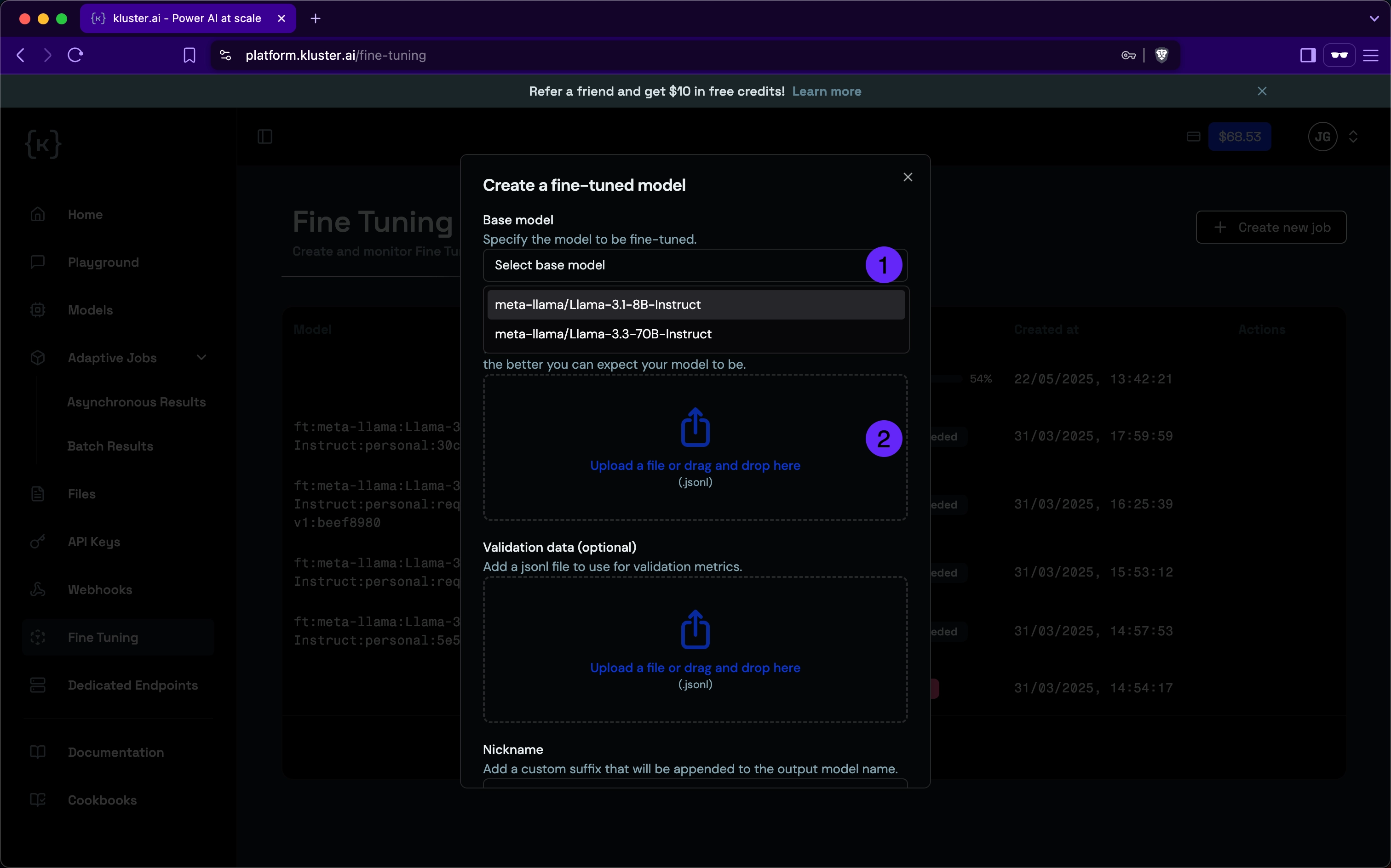

Choose model and upload data

- Select a base model: Choose one of the available foundation models from the dropdown menu.

- Upload your file: Upload your prepared JSONL training file by dragging and dropping the file or using the file selection dialog.

Validation data

You can optionally upload a validation dataset in the same format as your training data. This helps evaluate the performance of your model during training.

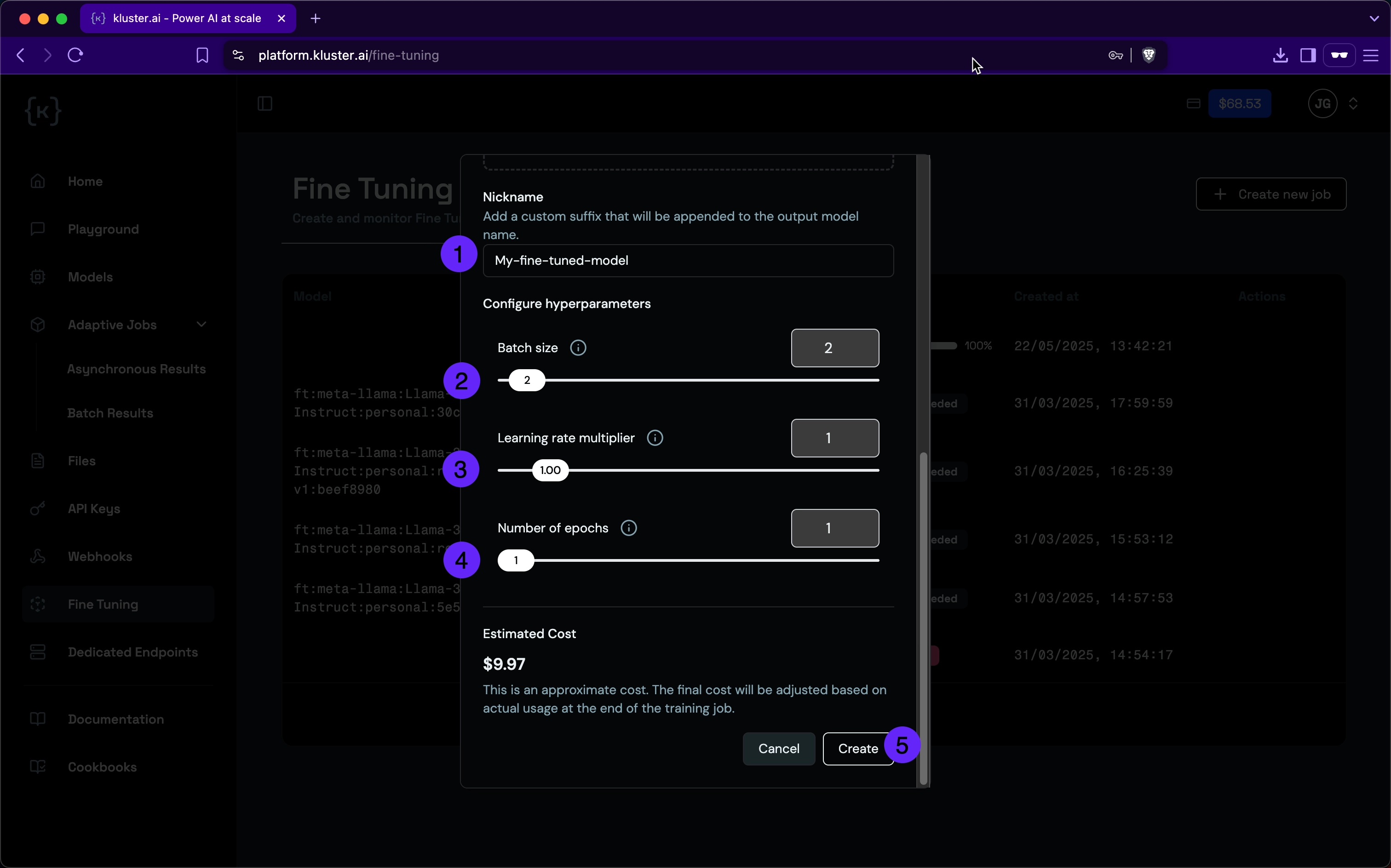
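If you do not already have a separate validation set, one option is to hold out a random portion of your examples. This sketch shuffles JSONL lines with a fixed seed and splits them into training and validation lists; the 10% default, the seed, and the function name are illustrative choices, not kluster.ai requirements. Make sure the training portion still meets the 10-example minimum.

```python
import random


def split_examples(lines, validation_fraction=0.1, seed=42):
    """Shuffle JSONL lines and split them into (training, validation) lists."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(lines)
    random.Random(seed).shuffle(shuffled)  # A fixed seed makes the split reproducible.
    n_val = max(1, round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]
```

Shuffling before splitting avoids a validation set that only contains the examples that happened to sit at the end of the file.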

Configure hyperparameters

Customize your fine-tuning job by configuring these settings:

- Nickname: Add an optional custom suffix that will be appended to your fine-tuned model name.

- Batch size: Control how many examples are processed in each training step.

- Learning rate multiplier: Adjust how quickly the model adapts to your training data.

- Number of epochs: Define how many times the model will cycle through your entire dataset.

- Create: Click the Create button to start the fine-tuning process.

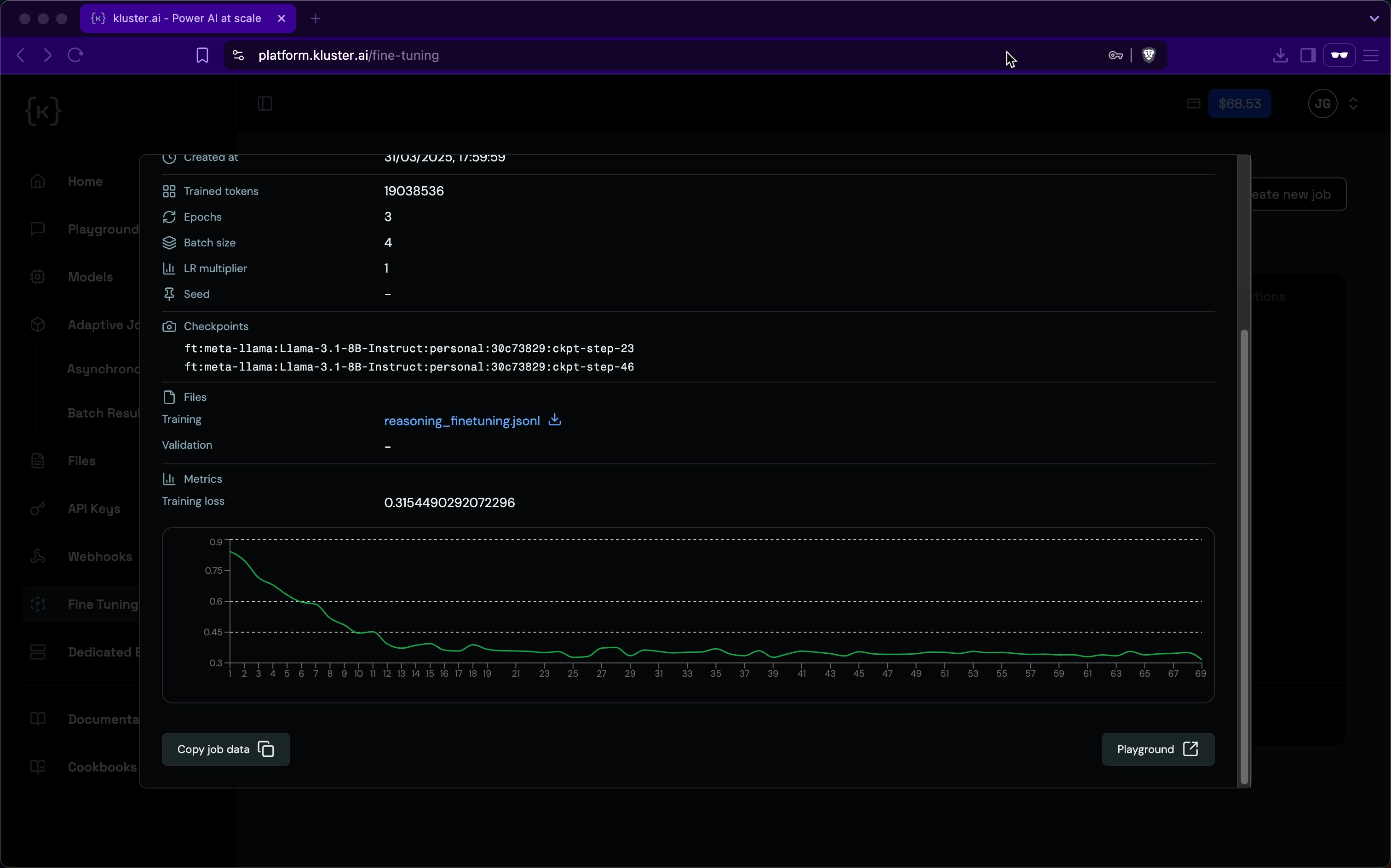
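Batch size, number of epochs, and dataset size together determine how many training steps a job runs. Assuming the common convention that each epoch covers the full dataset in batches and that a final partial batch still counts as a step (kluster.ai's exact accounting is not documented here), the total is ceil(examples / batch size) × epochs. The function name is hypothetical.

```python
import math


def training_steps(num_examples, batch_size, epochs):
    """Estimate optimizer steps, assuming a final partial batch still counts as a step."""
    if num_examples < 1 or batch_size < 1 or epochs < 1:
        raise ValueError("all arguments must be positive integers")
    steps_per_epoch = math.ceil(num_examples / batch_size)
    return steps_per_epoch * epochs
```

For example, `training_steps(100, 8, 3)` returns 39: 13 steps per epoch over 3 epochs. A larger batch size means fewer, larger steps per epoch.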

Monitor job progress

After submitting your fine-tuning job, you can monitor its status and progress on the fine-tuning page.

Each job displays information including:

- Job ID

- Base model

- Training method

- Creation date

- Current status

- Training metrics (when complete)

Status Update

The job status first displays "queued," then "running," and finally "succeeded" when the job completes.
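The fine-tuning page shows these updates for you, but if you track jobs from a script (see the API approach under Next steps), the same status sequence suggests a simple polling loop. In this sketch, `get_status` is a hypothetical function you supply that returns a job's current status string; the loop treats only "queued" and "running" as in progress and returns as soon as any other status appears. The function name, poll interval, and timeout are illustrative.

```python
import time

# Statuses named in this guide; any other value is treated as final here.
IN_PROGRESS = {"queued", "running"}


def wait_for_job(get_status, job_id, poll_seconds=30.0, timeout_seconds=None, sleep=time.sleep):
    """Poll get_status(job_id) until the job leaves the in-progress states.

    get_status is a caller-supplied (hypothetical) function returning the
    job's current status string. Returns the distinct statuses seen, in order.
    """
    seen = []
    start = time.monotonic()
    while True:
        status = get_status(job_id)
        if not seen or seen[-1] != status:
            seen.append(status)  # Record each status change once.
        if status not in IN_PROGRESS:
            return seen
        if timeout_seconds is not None and time.monotonic() - start > timeout_seconds:
            raise TimeoutError(f"job {job_id} still {status!r} after {timeout_seconds}s")
        sleep(poll_seconds)
```

Passing `sleep` as a parameter keeps the loop easy to test with a fake status source, without waiting between polls.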

Access your fine-tuned model

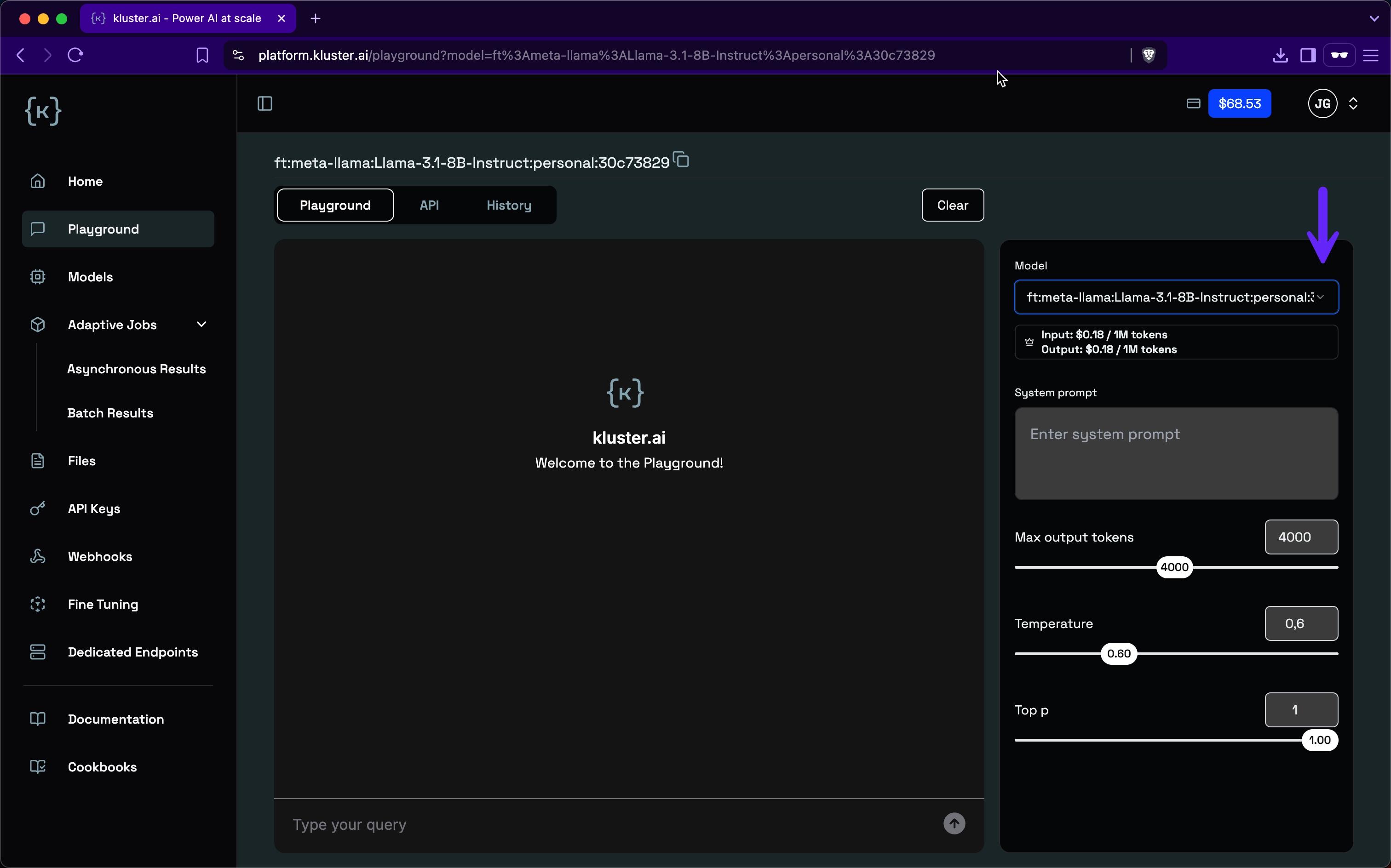

Once fine-tuning is complete, your custom model will be listed on the fine-tuning page with its unique identifier and available in the model selection dropdown in the playground.

Benefits of fine-tuning

Fine-tuning offers several advantages over using general-purpose models:

- Improved performance: Fine-tuned models often outperform base models on specific tasks.

- Cost efficiency: Smaller fine-tuned models can outperform larger general-purpose models on the target task at a lower cost.

- Reduced latency: A smaller fine-tuned model can stand in for a larger general-purpose one, delivering faster responses for your applications.

- Consistency: Fine-tuned models produce more reliable outputs tailored to your specific task or domain.

Next steps

- Detailed tutorial: Follow the Fine-tuning sentiment analysis tutorial.

- API reference: Review the API reference documentation for all fine-tuning related endpoints.

- Explore models: See the Models page to check which foundation models support fine-tuning.

- API approach: Learn how to fine-tune models programmatically with the kluster.ai API.